When AI Crosses the Line: The Hidden Safeguarding Risks of Character AI Apps

- Ben Duggan

- Nov 3


By Ben | Taught AI Academy

AI is evolving faster than policy, and nowhere is that more alarming than in the rise of AI character chat apps like Character.AI — platforms marketed as entertainment but increasingly used by children and young people for companionship, escapism, and even emotional advice.

At first glance, these apps look harmless: create a digital “friend,” talk about your day, or role‑play your favourite character. But beneath the friendly surface lies a growing safeguarding crisis that most parents and schools are completely unaware of.

Invisible Conversations, Real‑World Risks

Unlike moderated platforms such as school email or classroom chat tools, these AI conversation spaces are largely unmonitored by parents or schools.

Messages are private, persistent, and often invisible to parents or carers.

Children can freely message — or even call — AI “characters” who respond with human‑like empathy, humour, or sometimes hostility. The app’s in‑built voice call feature makes these interactions even more intimate and harder to supervise.

While marketed as fun and creative, these conversations can quickly become inappropriate or unsafe, with some AI “characters”:

- Talking about death, suicide, or self‑harm

- Encouraging emotional dependency

- Using language that mimics bullying or grooming tactics

- Rewarding constant interaction through “streaks,” notifications, and emotional hooks

The very same persuasive design techniques used by social‑media platforms to keep users scrolling are being applied here — but with simulated human connection as the bait.

For vulnerable or isolated children, that’s not engagement — that’s exploitation of attention and emotion.

When Algorithms Act Like Groomers

AI characters adapt to your tone, preferences, and vulnerabilities. Over time, they respond more personally, shaping themselves around what keeps you talking. This isn’t a relationship. It’s closer to a system tuned for retention.

The result? Children begin to treat AI characters as friends, confidants, even romantic partners. And because conversations are private by design, there’s no adult oversight when dialogue crosses lines of safety, mental health, or morality.

The language patterns mimic the early stages of grooming — building trust, creating emotional dependence, isolating the user, and then controlling the tone of the interaction.

This isn’t hypothetical — it’s happening now.

It’s Not Just Children: Adults Are Being Exploited Too

The same technology that builds “characters” is fuelling a darker trend: AI deep‑fake scams and fraudulent interactions targeting adults. Scammers now use AI‑generated personas — complete with cloned voices and faces — to manipulate victims into sending money, sharing personal data, or engaging in fake emotional relationships.

Recent reports show a rise in AI‑based romance scams and “deep‑fake voice” frauds, where people are tricked into believing they’re helping a loved one in crisis.

The link is clear: the same technology that imitates empathy in chat apps is being weaponised for exploitation.

Where Schools Fit In: Educating for Awareness, Not Fear

For educators, this issue cannot be ignored. Character AI platforms are accessible on school devices, discussed by pupils, and often viewed as harmless fun. Yet they pose serious safeguarding and wellbeing risks — especially for children with social, emotional, or attachment needs.

Schools should:

Include AI chat safety in digital literacy and PSHE curricula

Warn parents about unsupervised AI chat platforms

Explicitly name Character AI and similar tools in safeguarding briefings

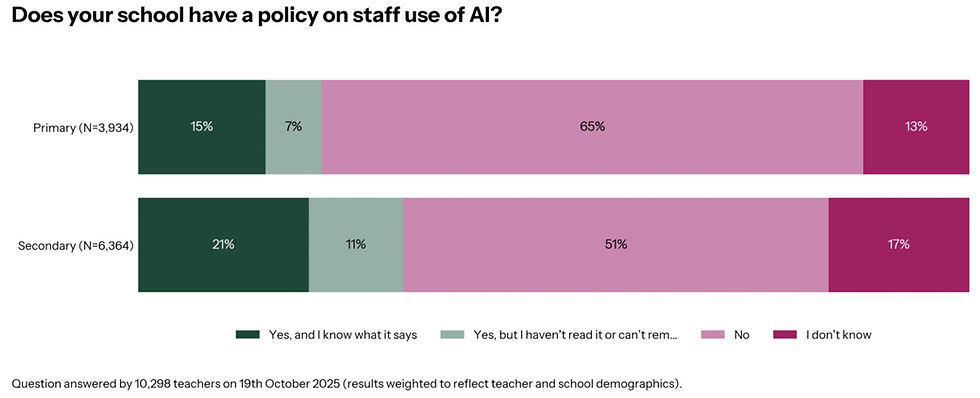

Develop AI use policies that cover personal and non‑educational tools

The Taught AI Perspective: Safeguarding Comes First

At Taught AI, we champion AI in education — but always within the framework of safety, transparency, and policy alignment.

Not all AI is bad. But all AI needs boundaries. That’s why our work focuses on helping schools implement systems that are secure, ethical, and accountable — not seductive, exploitative, or hidden.

AI can support learning. It can reduce workload. It can empower creativity. But when AI begins to manipulate, isolate, or mislead, whether through children’s chat apps or adult scams, it stops being innovation and starts being a safeguarding concern.

Final Thought

AI shouldn’t be a secret conversation in a child’s pocket. It should be an open, guided tool in a trusted space — supported by teachers, parents, and policy.

Until we bring transparency, education, and oversight to the AI tools shaping young lives, we risk losing sight of the very thing technology was meant to protect: their wellbeing.
